ChatGPT and Copilot give you access to very powerful AI assistants, built on large language models. The downside is that with the free versions, your conversations may be used for training by default. If you want more privacy, running a large language model locally can be a good option. We’ll show you how to do that with Ollama.

A ‘large language model’ (LLM) is an AI model trained on a vast amount of text. This training allows an LLM to generate text and serve as an AI assistant. More and more LLMs are becoming available. ChatGPT is, of course, very popular, and OpenAI keeps releasing new models such as GPT-4o and o1. But Meta, Anthropic, and Google are also developing their own LLMs, and with them their own AI assistants.

Some of these models can be run locally, albeit in a trimmed-down form. This way, all the data you enter stays on your own computer, and you won’t experience delays when the servers get too busy. You can also ask as many questions as you like, without the usage limits that online services impose.

Before getting started, make sure your PC has at least 8 GB of RAM. That is enough to run the smaller models (such as those with 7 billion parameters) locally. For somewhat larger models (such as those with 13 billion parameters), you’ll need at least 16 GB.

Installing and Using Ollama

In this how-to, we’ll use Ollama, which lets you choose from AI models by Google, Meta, and Microsoft, among others. You can download Ollama from its website for Windows, macOS, or Linux. We’ll use Windows. Click the download button in the middle of the page, then click Download for Windows (Preview). Run the downloaded file and click Install to start the installation.

The installation completes automatically, after which Ollama starts in the background. Click the Windows notification at the bottom right that says Click here to get started. This opens a command prompt with instructions on how to install a model. If you missed the notification or nothing happens, don’t worry: right-click the Start button and select Terminal or Command Prompt.

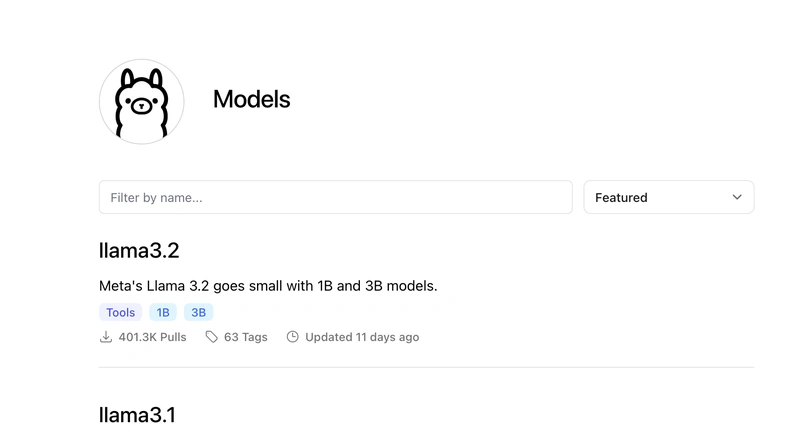

To install an LLM, type ollama run <model name>. For example, to use Meta’s Llama 3.1, type ollama run llama3.1. Ollama downloads and installs the model, after which you can chat with the LLM just as you would with ChatGPT or Copilot, except that the chat takes place in the terminal.

Downloading the model may take some time; Llama 3.1, for instance, is 4.7 GB. Once the download is complete, you can start chatting right away: type a message and press Enter to send it.
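If you’d rather ask a single question from a script than chat interactively, ollama run also accepts the prompt as an extra argument; the model then answers once and exits. The Python sketch below wraps that in a small helper. It assumes Python 3.9 or later and that the ollama executable is on your PATH; build_run_command and ask are hypothetical helper names of our own, not part of Ollama.

```python
import shutil
import subprocess


def build_run_command(model: str, prompt: str) -> list[str]:
    """Return the arguments for a one-shot `ollama run` call.

    Passing the prompt as an extra argument makes Ollama answer once
    and exit instead of opening the interactive chat.
    """
    return ["ollama", "run", model, prompt]


def ask(model: str, prompt: str) -> str:
    """Run the model once and return its answer as text.

    Assumes the ollama executable is on PATH; a model that is not yet
    installed is downloaded first, which can take a while.
    """
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama executable not found on PATH")
    result = subprocess.run(
        build_run_command(model, prompt),
        capture_output=True,  # collect the answer instead of printing it
        encoding="utf-8",
        check=True,  # raise an error if ollama exits with a failure code
    )
    return result.stdout.strip()
```

For example, print(ask("llama3.1", "Explain in one sentence what a large language model is.")) prints the model’s answer and returns to your script.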

To start a new chat, type /clear. To save your session, type /save <name>; you can later load it with /load <name> and continue the conversation. Type /bye to end the chat and /? for an overview of all available commands.

In addition to Llama 3.1, Ollama offers many other models, such as Google’s Gemma, Alibaba’s Qwen, Microsoft’s Phi, and Nvidia’s Nemotron. You can find the full list of available models on Ollama’s website. To use a model, type ollama run <model name> in a terminal. To remove a model and free up disk space, type ollama rm <model name>. Type ollama list to see all installed LLMs.
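If you try out many models, your disk fills up quickly. The sketch below combines ollama list and ollama rm to remove every installed model except the ones you want to keep. It assumes that the first line printed by ollama list is a header and that the model name, including its tag (such as :latest), is the first column of every following line. parse_model_names, models_to_remove, and clean_up are hypothetical helper names.

```python
import subprocess


def parse_model_names(list_output: str) -> list[str]:
    """Extract model names from the text printed by `ollama list`.

    Assumption: the first non-empty line is a header and the model name
    is the first whitespace-separated column of each following line.
    """
    lines = [line for line in list_output.splitlines() if line.strip()]
    return [line.split()[0] for line in lines[1:]]


def models_to_remove(installed: list[str], keep: set[str]) -> list[str]:
    """Return the installed models that are not in the keep set."""
    return [name for name in installed if name not in keep]


def clean_up(keep: set[str]) -> None:
    """Remove every installed model except the ones listed in `keep`."""
    output = subprocess.run(
        ["ollama", "list"], capture_output=True, encoding="utf-8", check=True
    ).stdout
    for name in models_to_remove(parse_model_names(output), keep):
        subprocess.run(["ollama", "rm", name], check=True)
```

Calling clean_up({"llama3.1:latest", "llava:7b"}) would keep only those two models. Note that this really deletes models, so check the output of ollama list first and use the names exactly as it shows them.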

Ollama with Images

Sometimes you may want to give your LLM an image as input. Ollama can do this too, but you first need a model that can handle images, such as LLaVA. Open a terminal and type ollama run llava:7b. Wait while the model downloads; it’s 4.1 GB. The 7b refers to the model size, in this case 7 billion parameters. In general, more parameters make a model more capable, but also larger and more demanding on your hardware. LLaVA is also available with 13 billion or 34 billion parameters, but the download then grows to 7.4 GB or 19 GB, respectively.
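The link between parameter count and download size is mostly arithmetic: size ≈ parameters × bits per weight ÷ 8. The sketch below assumes about 4 bits per weight, which is typical of the quantized models that Ollama downloads by default. It ignores extras such as LLaVA’s image encoder and file metadata, so treat the result as a rough lower bound. estimated_size_gb is a hypothetical helper name.

```python
def estimated_size_gb(parameters: float, bits_per_weight: float = 4.0) -> float:
    """Rough model size in GB (10**9 bytes).

    Each parameter takes bits_per_weight bits; dividing by 8 gives bytes.
    Assumes ~4-bit quantization by default and ignores extra components.
    """
    return parameters * bits_per_weight / 8 / 1e9


# Compare the three LLaVA sizes mentioned above.
for billions in (7, 13, 34):
    size = estimated_size_gb(billions * 1e9)
    print(f"{billions}b: about {size:.1f} GB")
```

This prints estimates of 3.5, 6.5, and 17.0 GB. The sizes quoted above are somewhat larger, which is plausible given the parts this estimate leaves out. It also shows why quantization matters: with bits_per_weight=16, the 7b model alone would need about 14 GB.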

Once the download is complete, start the model with ollama run llava and type describe this image: followed by the path to your image file, for example: describe this image: C:/Users/
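You can also do this from a script. The sketch below assumes that, as in the interactive chat, ollama run picks up an image path included in a prompt passed as an argument. It also assumes that ollama is on your PATH and llava:7b is installed. describe_image and build_image_prompt are hypothetical helper names.

```python
import subprocess
from pathlib import Path


def build_image_prompt(image_path: str, question: str = "describe this image:") -> str:
    """Put the question and the image path in a single prompt string."""
    return f"{question} {Path(image_path)}"


def describe_image(image_path: str, model: str = "llava:7b") -> str:
    """Ask a vision model such as LLaVA to describe a local image file.

    Assumption: ollama run detects the image path inside the prompt,
    just as it does in the interactive chat.
    """
    # Fail early with a clear message instead of sending a bad path to the model.
    if not Path(image_path).is_file():
        raise FileNotFoundError(f"image not found: {image_path}")
    result = subprocess.run(
        ["ollama", "run", model, build_image_prompt(image_path)],
        capture_output=True,
        encoding="utf-8",
        check=True,
    )
    return result.stdout.strip()
```

For example, print(describe_image("photo.jpg")) would describe photo.jpg, a hypothetical image in the current folder.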

Conclusion

In this how-to, we’ve shown you how to install Ollama and start using it. We’ve also covered the commands you can use in your chats, how to install and use other LLMs, and how to work with images in Ollama.